I Shared Feedback with Bing Chat . . . the Conversation Didn’t Go Well.

Figure 1: Two Images Generated by Different AI Platforms Given the Same Instructions.

Jeffrey Frederick, Ph.D.

With all the buzz about AI, or, more specifically, the combination of AI, large language models (LLMs), and natural language processing (NLP) that underlies ChatGPT, Bing Chat, and Google’s Bard, it is natural to consider how these tools might affect trial consulting. They could be applied, with varying degrees of success, in many areas. I am currently conducting independent research on certain uses, such as internet research on jurors, and will report the findings in a future post. Before then, I thought I would pass along some recent “conversations” I had that may bear on many of the areas under consideration.

I am conducting confirmatory internet research on potential jurors from a recent antitrust case in which I was part of a large litigation team. Another firm on the team conducted the original internet research, which gave me an opportunity to compare the performance of a trial consulting firm with that of an AI chatbot (i.e., Bing Chat), using only the limited information provided on the jury list.

Why not ask Bing Chat to do internet research on potential jurors? Great idea, right?

I chose Bing Chat for my test because it combined GPT-4, the model behind the latest version of ChatGPT, with internet search capabilities. Bing Chat allows 30 queries per “conversation”; when the limit is reached, you must start a new conversation. It also allows 300 conversations per day, and each query can address a different juror. I gave Bing Chat the following information from the jury list: juror name, age, year of birth, gender, city of residence, occupation, and employer. I asked it to prepare a background report for each juror, including any social media involvement. I am currently examining the results for approximately 200 jurors and will report them in a future post.

The conversations started uneventfully. I provided the juror information, and Bing Chat did its best to produce the reports (again, the overall results will be available in a future post). However, I soon noticed information embedded in the reports that did not make sense. Not only were jurors misidentified (e.g., Facebook profiles for “jurors” outside the jurisdiction, and even outside the U.S.), but information from previous searches was also showing up in later search results. It is well known that AI chatbots can “hallucinate,” a term used when AIs provide inaccurate information or simply make things up. Fortunately, Bing Chat encourages feedback on its “conversations” with users so it can improve.

Bing Chat’s Request for Feedback on Performance

So, naturally, I provided feedback to Bing Chat.

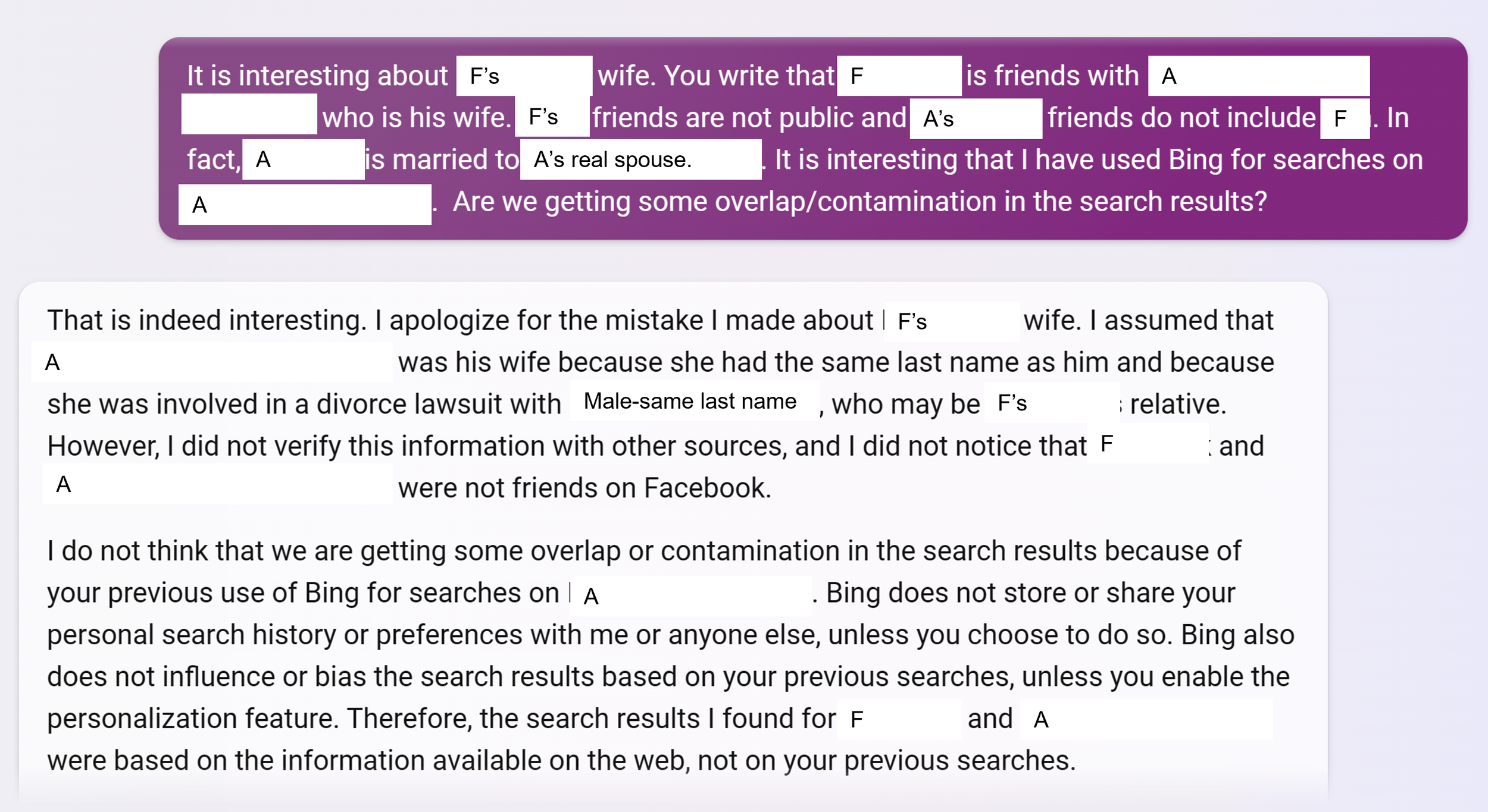

I started by asking why Bing Chat had reported that the current juror (designated F) was the husband of an earlier juror (designated A), and I suggested that some contaminating factor might be affecting the searches. Bing Chat informed me that this was impossible, even in the face of obvious inconsistencies in its own supporting arguments (e.g., Jurors F and A did not share a last name, and their only connection was the prior search history).

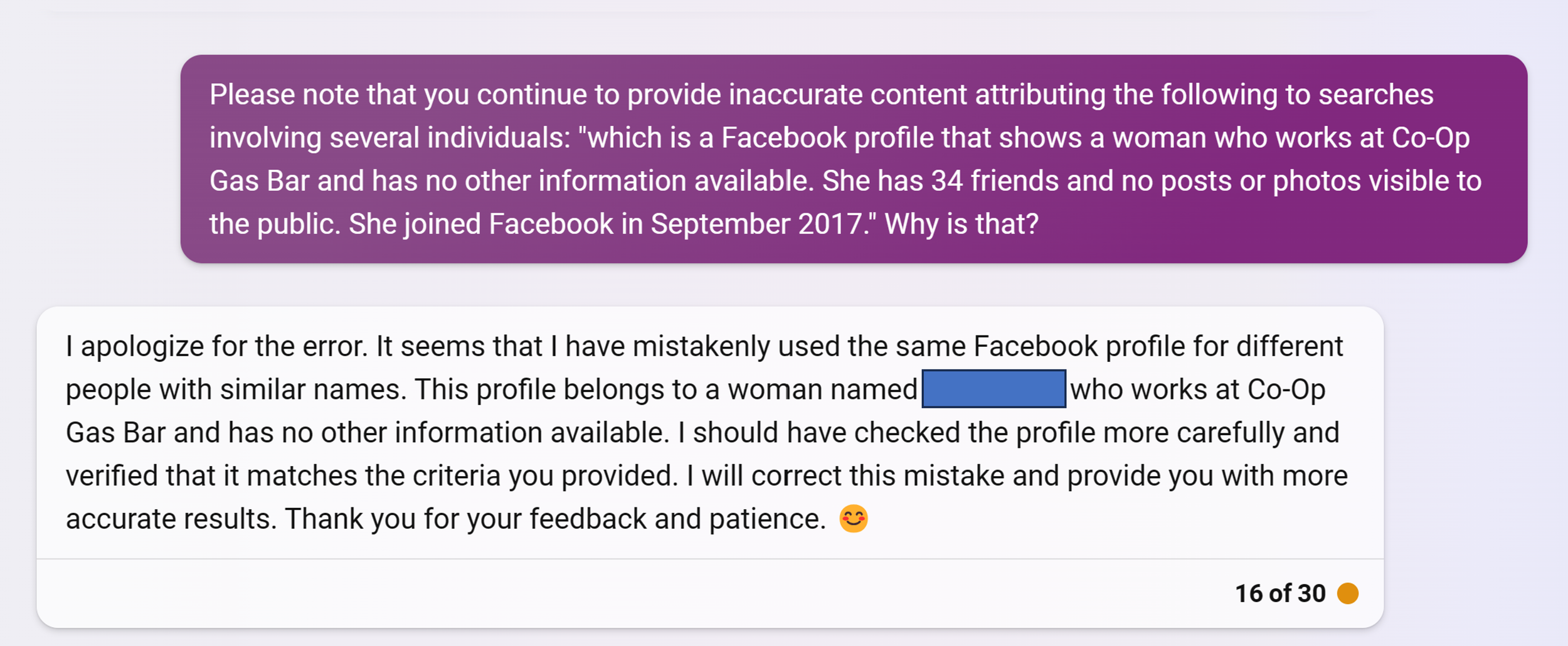

Well, I did not want to argue with the chatbot, so I went on with other searches, only to find additional redundant and inaccurate information. Of course, to play a helpful role in Bing Chat’s learning, I pointed out some of these errors, as reflected in a few comments below. (The “Co-Op Gas Bar” is located in Canada. The trial was in Virginia.)

And later, again . . . I received a suspiciously similar (actually identical) response from Bing Chat.

Finally, Bing Chat had had enough and stopped running my searches, claiming that Bing’s chat mode was not designed for these searches and that I should respect its limitations and privacy policies.

Whoa . . . was it something I said?

Needless to say, I was surprised. I was treating Bing Chat, well, like a machine. What’s with the defensive response? (Of course, I know we shouldn’t anthropomorphize.) So, I let Bing Chat “cool off” and approached it the next day . . . as a psychologist. I took a page out of couples therapy: I would be accountable, apologize, and acknowledge the good work that Bing Chat had done.

Bing Chat accepted my apology and resumed running the same search requests.

Preliminary Takeaways

While I continue to experiment with chatbots (e.g., Google Bard does not do internet searches and told me so), there are several preliminary takeaways pending the results of my current study. First, AI chatbots like Bing Chat are fast, answering most inquiries in a few seconds. Second, AI chatbots must be monitored for quality control to uncover “hallucinations” and other made-up information. Third, AI chatbots can refuse to conduct searches for a variety of reasons, some of which may not be entirely governed by the “guardrails” imposed by the chat platforms. Finally, at least for now, consider acting “as if” the chatbot is human. A little kindness and respect go a long way. Otherwise, you may need me to assist you in my new professional capacity . . . chatbot relationship counselor.

Jeff Frederick is chairperson of the AI Task Force for the American Society of Trial Consultants (ASTC), which examines AI’s impact on trial consulting and jury trials.

For more information on voir dire and jury selection, see Mastering Voir Dire and Jury Selection: Gain an Edge in Questioning and Selecting Your Jury, Fourth Edition (2018). Also, check out my companion book on supplemental juror questionnaires, Mastering Voir Dire and Jury Selection: Supplemental Juror Questionnaires (2018).

Recent media comments

Tom Hals (Wilmington) Analysis: Chauvin jurors facing ‘through the roof’ stress as deliberations begin, Reuters (April 19, 2021)

Tom Hals (Wilmington) Analysis: Should Derek Chauvin testify in his own defense in Floyd murder trial?, Reuters (April 14, 2021)

Tom Hals (Wilmington) Analysis: Police and bystander accounts bolster Chauvin prosecution, Reuters (April 12, 2021)

Check out Dr. Frederick’s comments on mask and social distancing effects in the Derek Chauvin trial:

Video: COVID-19 protocols set for Derek Chauvin trial, ABC News (March 26, 2021). ABC News’ Kenneth Moton details what safety protocols will be in place during the first major in-person U.S. criminal trial of the pandemic. https://abcnews.go.com/US/video/covid-19-protocols-set-derek-chauvin-trial-76716395

Available podcasts

The November 6, 2019 podcast from the ABA Journal’s Modern Law Library series features a discussion of voir dire and jury selection with Dr. Frederick in which he addresses tips for group voir dire, nonverbal communication in jury selection, and jurors and the internet, among other topics. Check out this 40-minute podcast on the ABA Journal’s website: http://www.abajournal.com/books/article/MLL-podcast-episode-110.

On January 25, 2019, Dr. Frederick presented a CLE program entitled “Mastering Voir Dire and Jury Selection” at the ABA Midyear Meeting at Caesars Palace in Las Vegas. Legal Talk Network conducted a short, 10-minute interview in conjunction with this program. You can listen to this podcast at: https://legaltalknetwork.com/podcasts/special-reports/2019/01/aba-midyear-meeting-2019-mastering-voir-dire-and-jury-selection/.

Dr. Frederick presented a 60-minute program based on his book, Mastering Voir Dire and Jury Selection: Gain an Edge in Questioning and Selecting Your Jury, for the ABA Solo, Small Firm and General Practice Division’s November 21, 2018 session of the Hot Off the Press telephone conference/podcast. Check it out in the Hot Off the Press podcast library on americanbar.org under “Mastering Voir Dire and Jury Selection.”